Creating an S3 VPC Endpoint

For more information on VPC, visit https://aws.amazon.com/vpc/

VPC Endpoint Description

This tutorial walks through the steps required to create an S3 VPC endpoint. A VPC endpoint lets EC2 instances reach supported AWS services, such as S3, without traversing the public internet. A prime use case is giving EC2 instances in private subnets access to S3 buckets. In this scenario, we will set up an EC2 instance that has no internet connectivity but can still send data directly to a configured S3 bucket through the VPC endpoint. Because objects travel between the EC2 instance and the S3 bucket over the AWS network, a VPC endpoint can reduce data transfer costs. It can also improve read/write performance, since the traffic does not have to hairpin through a NAT device or traverse a public hop. Once our setup is complete, our VPC should operate like the following diagram.

VPC Endpoint Prerequisites

1. Active AWS Account:

You will need to have an active AWS account, as this lab will cover setting up a VPC endpoint within an AWS account.

VPC Endpoint Setup

To test out this scenario, we will assume the following steps have previously been taken:

1. Bastion Host EC2 Instance:

For this example, we will launch and configure a standard bastion host in our VPC. This bastion host will have both a public and a private IP address. It can then be reached via traditional SSH on its public IP and can also talk to our application server via its private IP.

2. Application Host EC2 Instance:

For simplicity, we will also launch and configure a second instance in the same region, availability zone, and subnet as our bastion host. This instance will have a private IP address only: at launch time, make sure to disable the option that assigns the instance a public IP. We may need to connect to the instance for configuration, such as adding the bastion host's SSH public key to the authorized_keys file. In that case, we can simply provision an EIP (Elastic IP) and associate it with the application instance. After setup, however, make sure that the EIP has been disassociated from the instance, and that the bastion host is able to SSH to the application instance via its private IP address.

3. AWS CLI:

In order to simulate an application attempting to push an object to an S3 bucket, we will simply install the AWS CLI tools on the application instance, and use the s3 copy command to try and copy a file from the application instance to an S3 bucket.

AWS CLI Tools:

If using the Amazon Linux AMI, this step is not required, as the Amazon Linux AMI already has the AWS CLI tools installed by default.

pip install awscli

4. File to Push:

The last thing we need on the application instance is a file to push to the S3 bucket. Any file will do. For this example, I created a text file in the /home/ec2-user/ directory that contains the S3 service description taken straight from the AWS S3 website.

Application Instance Shell via Bastion Host SSH Session:

echo "Companies today need the ability to simply and securely collect, store, and analyze their data at a massive scale. Amazon S3 is object storage built to store and retrieve any amount of data from anywhere – web sites and mobile apps, corporate applications, and data from IoT sensors or devices. It is designed to deliver 99.999999999% durability, and stores data for millions of applications used by market leaders in every industry. S3 provides comprehensive security and compliance capabilities that meet even the most stringent regulatory requirements. It gives customers flexibility in the way they manage data for cost optimization, access control, and compliance. S3 provides query-in-place functionality, allowing you to run powerful analytics directly on your data at rest in S3. And Amazon S3 is the most supported storage platform available, with the largest ecosystem of ISV solutions and systems integrator partners." > /home/ec2-user/testfile.txt

S3 Bucket Configuration

1. Create a Bucket:

Our application server will presumably need to read and write objects in an S3 bucket, so we need to create a bucket for it to use. To do this, click the Services menu at the top left of the navigation bar, and then choose S3. You can either find it in the list of services or type the first few letters of the service name in the search box and choose it from the filtered list.

Once in the S3 console, click the Create bucket button in order to create a new S3 bucket.

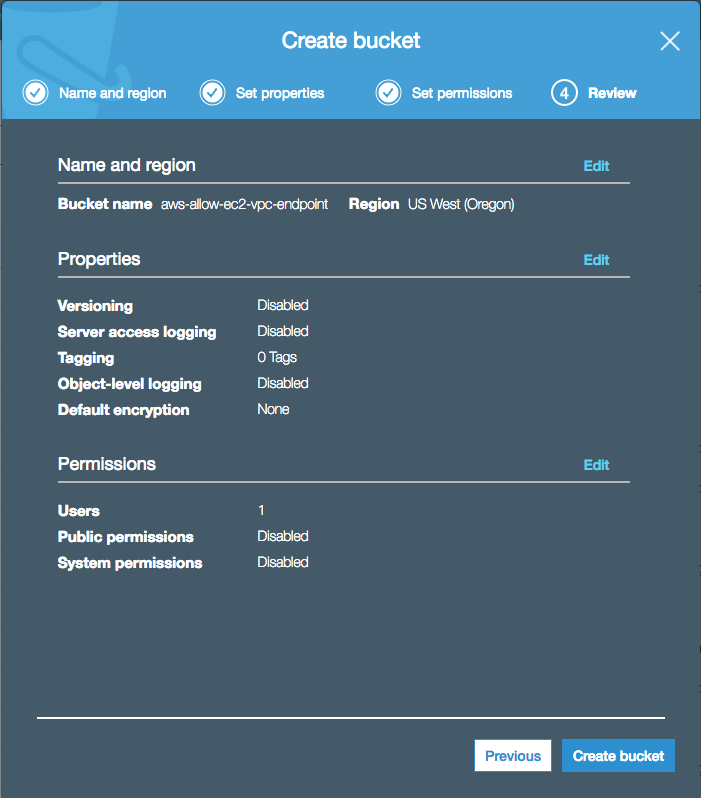

2. Bucket Configuration:

Next, the S3 Create bucket modal window will pop up, allowing us to set up and configure our S3 bucket. In the Name and region section, choose a bucket name and the region that the bucket will live in. Optionally, copy settings from an existing bucket, and then press the Next button.

Bucket Region:

You MUST ensure that the bucket you are creating lives in the SAME region as the VPC that you will be enabling the endpoint for. If you create the bucket in a different region from your application server, the application will NOT be able to reach the bucket through the endpoint. VPC endpoints are REGION SPECIFIC, so the bucket must live in the same region as the VPC endpoint that we will be creating.

Next, in the Set properties dialog, choose and enable options such as versioning, access logging, tags, and encryption, and click Next. For the purpose of this exercise, we will leave all options at their defaults and proceed to the next section.

Next, in the Set permissions dialog, configure any permissions that the bucket should have. By default, the bucket will give the owner full permissions. Here we could add permissions for other users, other accounts, and change the public/private flag of the bucket. Again for the purpose of this exercise we will leave the default values, and click Next. We will configure our bucket permissions with a bucket policy in the next section.

Last, review the bucket configuration and click the Create bucket button.

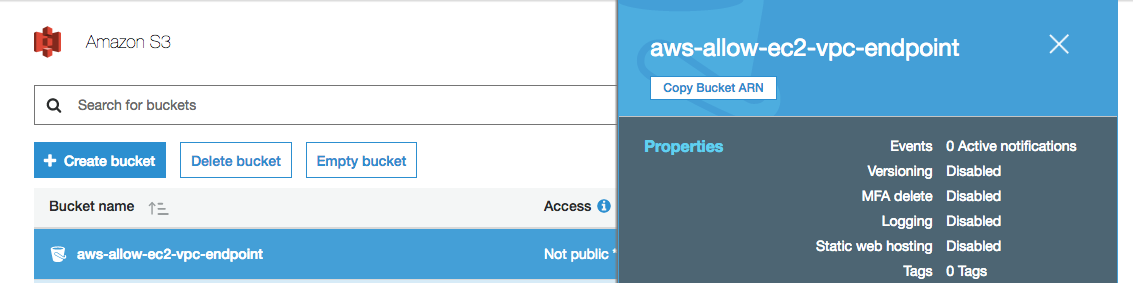

Once complete, you should now be able to look at your bucket list and see your newly created bucket.
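The region requirement above is easy to get wrong, so it can be worth confirming where the bucket actually lives. The AWS CLI call `aws s3api get-bucket-location --bucket <name>` returns a small JSON document with a `LocationConstraint` field. For buckets in us-east-1 that field is null, and some older eu-west-1 buckets report the legacy value `EU`. The Python sketch below normalizes that output and compares it with the VPC's region. `bucket_region` and `same_region` are hypothetical helpers, not part of any AWS SDK.

```python
import json


def bucket_region(get_bucket_location_output: str) -> str:
    """Normalize `aws s3api get-bucket-location` JSON output to a region name."""
    constraint = json.loads(get_bucket_location_output).get("LocationConstraint")
    if constraint in (None, ""):
        return "us-east-1"  # us-east-1 buckets report a null LocationConstraint
    if constraint == "EU":
        return "eu-west-1"  # legacy value returned for some older eu-west-1 buckets
    return constraint


def same_region(get_bucket_location_output: str, vpc_region: str) -> bool:
    """True if the bucket lives in the same region as the VPC (and its endpoint)."""
    return bucket_region(get_bucket_location_output) == vpc_region


if __name__ == "__main__":
    print(same_region('{"LocationConstraint": "us-west-2"}', "us-west-2"))  # True
    print(same_region('{"LocationConstraint": null}', "us-west-2"))         # False
```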

Bucket Policy:

Our bucket will still require a bucket policy, but before applying the policy, we must create a role that we can reference in it. So for now, we will leave our new bucket as is and come back to configure the policy in a later step.

3. Grab the Bucket ARN:

Lastly, we need to grab the ARN (Amazon Resource Name) of the new bucket, which will be used when creating our EC2 S3 access role. To grab the ARN, click somewhere in the whitespace of the bucket's row. When the bucket's properties appear in a pop-up panel, click the Copy Bucket ARN button to copy the bucket ARN to the clipboard.
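S3 bucket ARNs have a fixed form, `arn:aws:s3:::<bucket-name>`, with no region or account ID. The objects inside a bucket are addressed as `arn:aws:s3:::<bucket-name>/*`. The short Python sketch below derives both forms from a bucket name and recovers the bucket name from a copied ARN, which is the trimming step the IAM visual editor asks for later. The function names are hypothetical helpers, and the sketch assumes the standard `aws` partition (GovCloud and China regions use different partitions).

```python
# Hypothetical helpers for S3 ARNs in the standard "aws" partition.
PREFIX = "arn:aws:s3:::"


def bucket_arn(bucket: str) -> str:
    """ARN of the bucket itself (used for bucket-level actions such as s3:ListBucket)."""
    return PREFIX + bucket


def objects_arn(bucket: str) -> str:
    """ARN pattern matching every object in the bucket (used for Get/Put/DeleteObject)."""
    return f"{PREFIX}{bucket}/*"


def bucket_name_from_arn(arn: str) -> str:
    """Strip the ARN prefix and any object path, leaving only the bucket name."""
    if not arn.startswith(PREFIX):
        raise ValueError(f"not an S3 ARN in the aws partition: {arn!r}")
    return arn[len(PREFIX):].split("/", 1)[0]


if __name__ == "__main__":
    arn = bucket_arn("aws-allow-ec2-vpc-endpoint")
    print(arn)                                        # arn:aws:s3:::aws-allow-ec2-vpc-endpoint
    print(objects_arn("aws-allow-ec2-vpc-endpoint"))  # arn:aws:s3:::aws-allow-ec2-vpc-endpoint/*
    print(bucket_name_from_arn(arn))                  # aws-allow-ec2-vpc-endpoint
```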

S3 Access Policy

The next thing we need to do is create a custom policy for S3. We could use the existing AmazonS3FullAccess policy, but giving our application server full access to S3 is neither recommended nor best practice. To create a custom policy, go to the IAM console: click the Services menu at the top left of the navigation bar, and then choose IAM. You can either find it in the list of services or type the first few letters of the service name in the search box and choose it from the filtered list.

Next, from the IAM menu on the left side of the screen, click Policies, and then click the Create policy button.

1. Create an S3 Custom Policy:

In the Visual editor tab, select S3 as the service. Under Actions, expand List and choose ListBucket, expand Read and choose GetObject, and expand Write and choose PutObject and DeleteObject. Next, under the Resources section, ensure that Specific is chosen. For the object resource type, click the Add ARN link to open the dialog for adding the S3 bucket ARN that we copied in an earlier step. In the dialog, paste the ARN into the Bucket name field and remove everything but the actual bucket name. Under Object name, check the Any box, then click Add to return to the Create policy window. ListBucket acts on the bucket itself rather than on its objects, so also add the bucket ARN under the bucket resource type. We will not need any request conditions for this exercise, so simply click the Review policy button.
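For comparison, an identity-based policy that grants list access on the bucket plus object read, write, and delete looks roughly like the one built below. The IAM action for listing a bucket's objects is `s3:ListBucket`, and it is scoped to the bucket ARN, while the object actions are scoped to `bucket/*`. This is a sketch under those assumptions. `app_identity_policy` is a hypothetical helper, and the JSON the console actually generates (for example its `Sid` values or statement grouping) may differ.

```python
import json


def app_identity_policy(bucket: str) -> dict:
    """Hypothetical helper: list access on one bucket plus object read/write/delete."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is a bucket-level action, so it targets the bucket ARN itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                # Object actions target every key in the bucket via bucket/*.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }


if __name__ == "__main__":
    # Compare this output with the JSON tab of the IAM policy editor.
    print(json.dumps(app_identity_policy("aws-allow-ec2-vpc-endpoint"), indent=2))
```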

2. Review the Policy:

Next, review the policy, give it a name (and optionally a description), and click the Create policy button to complete the policy creation process.

3. Policy Created:

Now that we have our custom policy created, we can move to the next task of configuring a new role that will use this policy.

S3 Access Role

The next thing that we will need to do in order to be able to read and write data to Amazon S3 is to create a role that will allow our application instance the right to read and write to S3 via the custom policy that we just created.

1. Create New Role:

From the left side IAM menu, choose Roles, and then click on the Create role button.

2. Create EC2 S3 Role:

In the Create role view, ensure that you have selected AWS service from the top level choice list. Next, under the list of services, choose EC2, and then under the Select your use case section, again, select EC2. Finally click on the Next: Permissions button.

3. Select Custom Policy:

In the Attach permissions policies section, start typing the name of the custom policy that was previously created. Once it appears in the short list, select the policy and click the Next: Review button.

Last, we simply need to type a name and description for our new role, and then click the Create role button.

4. Role Complete:

Our role should now be complete and will be available in the list of assignable roles in the EC2 console. So now let's move on and assign our role.
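Choosing EC2 as the trusted service gives the role a trust policy that lets the EC2 service assume it. The console also creates an instance profile with the same name as the role, and that instance profile is what actually gets attached to the instance. The sketch below shows the standard EC2 trust policy as a Python dictionary, plus a hypothetical `trusts_ec2` check. The exact document your console generated can be viewed on the role's Trust relationships tab.

```python
import json

# Standard trust policy for a role that EC2 instances assume via an instance profile.
EC2_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "ec2.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def trusts_ec2(policy: dict) -> bool:
    """Return True if any Allow statement lets the EC2 service call sts:AssumeRole."""
    for stmt in policy.get("Statement", []):
        principal = stmt.get("Principal", {})
        services = principal.get("Service", []) if isinstance(principal, dict) else []
        if isinstance(services, str):
            services = [services]
        actions = stmt.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        if (stmt.get("Effect") == "Allow"
                and "ec2.amazonaws.com" in services
                and "sts:AssumeRole" in actions):
            return True
    return False


if __name__ == "__main__":
    print(json.dumps(EC2_TRUST_POLICY, indent=2))
    print(trusts_ec2(EC2_TRUST_POLICY))  # True
```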

Assign the Role

Our role now gives the EC2 application instance permission to perform the specified actions against our S3 bucket, so we need to assign it to the application instance. We do this from the EC2 dashboard. To navigate there, click the Services menu at the top left of the navigation bar, and then choose EC2. You can either find it in the list of services or type the first few letters of the service name in the search box and choose it from the filtered list.

1. Know your role, EC2...:

From the list of available instances, choose the application server instance. Once it is selected, click the Actions menu, scroll down to Instance Settings, and choose Attach/Replace IAM Role from the list of available options.

Next, from the list of available IAM roles, choose the role that was just created, and then click Apply.
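The same attachment can be scripted. The AWS CLI exposes it as `aws ec2 associate-iam-instance-profile`, which takes the instance ID and the instance profile (as `Name=...` or `Arn=...`). The Python sketch below only assembles that command as an argument list and prints a shell-quoted version. The instance ID is a placeholder, `associate_profile_cmd` is a hypothetical helper, and the profile name assumes the console-created instance profile shares the role's name.

```python
import shlex
from typing import List


def associate_profile_cmd(instance_id: str, profile_name: str, region: str) -> List[str]:
    """Build the CLI call that attaches an instance profile to a running instance."""
    return [
        "aws", "ec2", "associate-iam-instance-profile",
        "--region", region,
        "--instance-id", instance_id,
        "--iam-instance-profile", f"Name={profile_name}",
    ]


if __name__ == "__main__":
    # Placeholder instance ID; the profile name assumes the console reused the role name.
    cmd = associate_profile_cmd("i-0123456789abcdef0", "CR-S3-LRWD-Object-CDBucketOnly", "us-west-2")
    # Print a shell-safe version for review; subprocess.run(cmd, check=True) would execute it.
    print(shlex.join(cmd))
```

If the instance already has a profile attached, the CLI's `replace-iam-instance-profile-association` command is the counterpart to the console's Replace option.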

Set S3 Bucket Policy

Now that our role is created and assigned to the EC2 application server, the next step is to set up a bucket policy on the S3 bucket we created earlier. This policy grants the EC2 application instance, via its new role, permission to perform the required actions against our bucket. To do this, we must navigate back to the S3 console.

1. Bucket Policy:

From your S3 console, click on the bucket, and then from the bucket properties console, click on the Permissions tab, and then click Bucket Policy to access the policy editor view. Paste the following policy into the policy editor, and then click on Save.

Policy Substitutions:

In the policy below, substitute {{ROLE_ARN}} in the Principal section with the ARN of the role created in previous steps. Also substitute {{BUCKET_NAME}} in the Resource section with the actual name of the bucket created in previous steps.

Policy Syntax:

{ "Version": "2012-10-17", "Statement": [ { "Effect": "Allow", "Principal": { "AWS": "{{ROLE_ARN}}" }, "Action": [ "s3:GetObject", "s3:PutObject", "s3:DeleteObject" ], "Resource": "arn:aws:s3:::{{BUCKET_NAME}}/*" } ] }

Policy Example:

{ "Version": "2012-10-17", "Statement": [ { "Effect": "Allow", "Principal": { "AWS": "arn:aws:iam::012345678910:role/CR-S3-LRWD-Object-CDBucketOnly" }, "Action": [ "s3:GetObject", "s3:PutObject", "s3:DeleteObject" ], "Resource": "arn:aws:s3:::aws-allow-ec2-vpc-endpoint/*" } ] }

POLICY:

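This bucket policy grants the CR-S3-LRWD-Object-CDBucketOnly role, which is assumed by the EC2 service, permission to GetObject, PutObject, and DeleteObject on objects in the specified S3 bucket (aws-allow-ec2-vpc-endpoint). The role's custom IAM policy is scoped to this one bucket, so the role cannot read or write objects in any other bucket. However, this policy contains only an Allow statement. It does not by itself stop other users or roles in the same account that already have S3 permissions from accessing this bucket. Locking the bucket down to this role alone would require an explicit Deny statement.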
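The two placeholders can also be filled in programmatically, which avoids hand-editing JSON and catches a mistyped role ARN early. The sketch below builds the same bucket policy as a Python dictionary from a role ARN and a bucket name, then serializes it with `json.dumps`. `render_bucket_policy` and its loose role-ARN pattern are hypothetical. The values in the usage lines are the example values from this section.

```python
import json
import re

# Loose shape check for an IAM role ARN: arn:aws:iam::<12-digit account>:role/<path/name>
ROLE_ARN_RE = re.compile(r"^arn:aws:iam::\d{12}:role/[\w+=,.@/-]+$")


def render_bucket_policy(role_arn: str, bucket_name: str) -> str:
    """Return the bucket policy JSON with {{ROLE_ARN}} and {{BUCKET_NAME}} filled in."""
    if not ROLE_ARN_RE.match(role_arn):
        raise ValueError(f"unexpected role ARN: {role_arn!r}")
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": role_arn},
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)


if __name__ == "__main__":
    print(render_bucket_policy(
        "arn:aws:iam::012345678910:role/CR-S3-LRWD-Object-CDBucketOnly",
        "aws-allow-ec2-vpc-endpoint",
    ))
```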

Test S3 Access

Now that our environment is complete, we can test an S3 write operation from the application instance to verify that it fails. Our policies, roles, and bucket policy are all set up. Even so, when we run the aws s3 cp command, the EC2 application instance will by default try to reach the public S3 endpoint. This operation will fail because the application instance does not have any internet connectivity. To perform this test, SSH to the bastion host, then from the bastion host SSH to our application server and navigate to the home directory. From there, attempt an S3 copy with the following command:

aws --region us-west-2 s3 cp testfile.txt s3://aws-allow-ec2-vpc-endpoint/
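If the copy fails, it helps to confirm that the cause is the network path rather than permissions. One way is to try opening a TCP connection to the regional S3 endpoint on port 443. The Python sketch below does that with the standard `socket` module and a short timeout. It assumes the regional endpoint hostname `s3.us-west-2.amazonaws.com` for this tutorial's region, and the script name `check_s3.py` is hypothetical. Before the VPC endpoint exists, the check should report the endpoint as unreachable. Once the endpoint and its route are in place, the same check should succeed, assuming the instance's security group allows outbound HTTPS as the default one does. This works because a gateway endpoint keeps the public S3 hostname and changes only the route the traffic takes.

```python
import socket
import sys


def can_reach(host: str, port: int = 443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # timeouts, refused connections, and DNS failures are all OSErrors
        return False


if __name__ == "__main__":
    # Example: python3 check_s3.py s3.us-west-2.amazonaws.com
    for host in sys.argv[1:]:
        print(f"{host}:443 reachable: {can_reach(host)}")
```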

Configure S3 VPC Endpoint from Console

We have verified that our application instance cannot reach the public S3 endpoint, so we now need a VPC endpoint that lets the instance communicate with S3 using its private IP. To navigate to the VPC dashboard, click the Services menu at the top left of the navigation bar, and then choose VPC. You can either find it in the list of services or type the first few letters of the service name in the search box and choose it from the filtered list.

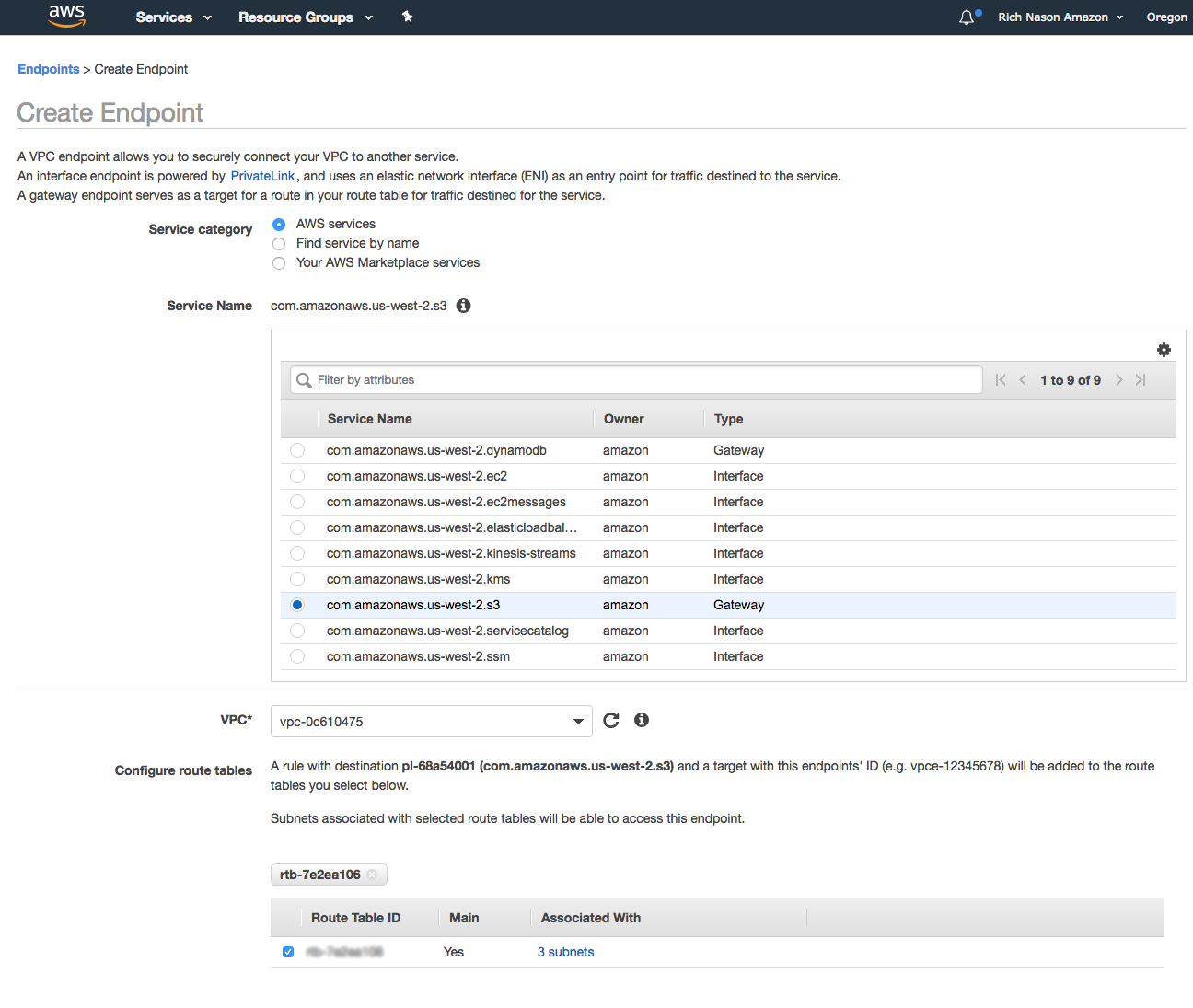

1. Create VPC Endpoint:

From the VPC console left menu, click on Endpoints, and then click the Create Endpoint button.

Next, on the Create Endpoint screen, choose com.amazonaws.us-west-2.s3 from the Service Name list. If both Gateway and Interface types are listed, choose the Gateway one. Then choose the correct VPC and the route table associated with the VPC that you are creating the endpoint for. Under the Policy section, click Custom, enter the following policy statement, and click the Create endpoint button.

{ "Statement": [ { "Action": ["s3:GetObject", "s3:PutObject","s3:DeleteObject"], "Effect": "Allow", "Resource": "arn:aws:s3:::aws-allow-ec2-vpc-endpoint/*", "Principal": "*" } ] }
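The endpoint policy is a second, independent filter. A request that travels through the endpoint must be allowed by the endpoint policy as well as by the IAM and bucket policies. The Python sketch below builds a custom endpoint policy of the same shape for a given bucket name, along with the region-specific service name (`com.amazonaws.<region>.s3`). It also includes a deliberately simple check of whether an action on a given object falls under the policy's Allow. The helper names are hypothetical. The matcher understands only exact action names and a trailing `/*` resource wildcard, not the full IAM policy evaluation logic.

```python
import json


def s3_service_name(region: str) -> str:
    """Gateway endpoint service name for S3 in the given region."""
    return f"com.amazonaws.{region}.s3"


def endpoint_policy(bucket: str) -> dict:
    """Custom endpoint policy: object reads, writes, and deletes on one bucket only."""
    return {
        "Statement": [
            {
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Effect": "Allow",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                "Principal": "*",
            }
        ]
    }


def roughly_allows(policy: dict, action: str, bucket: str, key: str) -> bool:
    """Simplified check: exact action match and 'bucket/*' resource wildcard only."""
    object_arn = f"arn:aws:s3:::{bucket}/{key}"
    for stmt in policy["Statement"]:
        resource = stmt["Resource"]
        covers = object_arn == resource or (
            resource.endswith("/*") and object_arn.startswith(resource[:-1])
        )
        if stmt["Effect"] == "Allow" and action in stmt["Action"] and covers:
            return True
    return False


if __name__ == "__main__":
    policy = endpoint_policy("aws-allow-ec2-vpc-endpoint")  # the bucket the upload test targets
    print(s3_service_name("us-west-2"))  # com.amazonaws.us-west-2.s3
    print(json.dumps(policy))
    print(roughly_allows(policy, "s3:PutObject", "aws-allow-ec2-vpc-endpoint", "testfile.txt"))  # True
    # Hypothetical bucket not named in the policy: rejected at the endpoint.
    print(roughly_allows(policy, "s3:PutObject", "some-other-bucket", "testfile.txt"))  # False
```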

Configure S3 VPC Endpoint from CLI

Because we love the CLI, let's also look at how to create the same VPC endpoint with the AWS CLI. To do this, we need 2 pieces of information: the VPC ID of the VPC that the endpoint will be created in, and the route table ID of the main route table in the VPC that will host the endpoint.

1. Gather required information:

For the purpose of this exercise, we will assume the following information has been collected from our AWS account:

VPC-ID: vpc-a12345bc

Routing Table ID: rtb-a1b2c3d4

2. Create VPC Endpoint via CLI:

Now that we have the 2 pieces of information the CLI needs, let's create the endpoint.

Syntax:

aws ec2 create-vpc-endpoint --region {Region} --vpc-id {VPC-ID} --route-table-ids {Route-Table-ID} --service-name {Endpoint-Service-ID} --policy-document "{Access Policy JSON}"

Example Request:

aws ec2 create-vpc-endpoint --region us-east-2 --vpc-id vpc-a12345bc --route-table-ids rtb-a1b2c3d4 --service-name com.amazonaws.us-east-2.s3 --policy-document "{\"Statement\": [{\"Action\": [\"s3:GetObject\", \"s3:PutObject\",\"s3:DeleteObject\"],\"Effect\": \"Allow\",\"Resource\": \"arn:aws:s3:::aws-s3-endpoint-bucket/*\",\"Principal\": \"*\"}]}"

Example Response:

{ "VpcEndpoint": { "VpcEndpointId": "vpce-0acd56c2e7848b287", "VpcEndpointType": "Gateway", "VpcId": "vpc-a12345bc", "ServiceName": "com.amazonaws.us-east-2.s3", "State": "available", "PolicyDocument": "{\"Version\":\"2008-10-17\",\"Statement\":[{\"Effect\":\"Allow\",\"Principal\":\"*\",\"Action\":[\"s3:GetObject\",\"s3:PutObject\",\"s3:DeleteObject\"],\"Resource\":\"arn:aws:s3:::aws-s3-endpoint-bucket/*\"}]}", "RouteTableIds": [ "rtb-a1b2c3d4" ], "SubnetIds": [], "Groups": [], "PrivateDnsEnabled": false, "NetworkInterfaceIds": [], "DnsEntries": [], "CreationTimestamp": "2018-08-05T17:55:07Z" } }
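Escaping the policy JSON by hand, as in the example request above, is easy to get wrong. One alternative is to build the policy as a dictionary, serialize it with `json.dumps`, and pass it as a single entry in an argument list so that no shell quoting is involved. The Python sketch below does this. The IDs, region, and bucket are the example values from this section, `create_endpoint_cmd` is a hypothetical helper, and the command is only printed, not executed.

```python
import json
import shlex
from typing import List


def create_endpoint_cmd(region: str, vpc_id: str, route_table_ids: List[str], bucket: str) -> List[str]:
    """Assemble an `aws ec2 create-vpc-endpoint` call for an S3 gateway endpoint."""
    policy = {
        "Statement": [{
            "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
            "Effect": "Allow",
            "Resource": f"arn:aws:s3:::{bucket}/*",
            "Principal": "*",
        }]
    }
    return [
        "aws", "ec2", "create-vpc-endpoint",
        "--region", region,
        "--vpc-id", vpc_id,
        "--route-table-ids", *route_table_ids,
        "--service-name", f"com.amazonaws.{region}.s3",
        "--policy-document", json.dumps(policy),  # one argv entry, no manual escaping
    ]


if __name__ == "__main__":
    cmd = create_endpoint_cmd("us-east-2", "vpc-a12345bc", ["rtb-a1b2c3d4"], "aws-s3-endpoint-bucket")
    # Shell-quoted form for copy/paste; subprocess.run(cmd, check=True) would run it directly.
    print(shlex.join(cmd))
```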

Verify the VPC Endpoint

1. Verify the Endpoint:

Once the endpoint has been created, verify that the endpoint is now in the Endpoints console, and the status is set as available.

Next, we need to verify that the route to the VPC endpoint has been added to the route table. Click the Route Tables link in the left-hand VPC menu, select the main route table, and click the Routes tab in the bottom portion of the console. Verify that a route targeting the vpce (VPC endpoint) ID has been added. Its destination is the S3 prefix list (pl-...) rather than a CIDR block.
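The same check can be done from the CLI with `aws ec2 describe-route-tables --route-table-ids <id>`. In our understanding, a gateway endpoint route appears in that output with a `DestinationPrefixListId` (the S3 prefix list, `pl-...`) and a `GatewayId` equal to the endpoint ID (`vpce-...`). The Python sketch below scans JSON of that shape for such routes. The field names are assumed from the EC2 `DescribeRouteTables` output, `endpoint_routes` is a hypothetical helper, and the sample in the usage lines is hand-written rather than captured from a real account.

```python
import json
from typing import List, Tuple


def endpoint_routes(describe_output: str) -> List[Tuple[str, str]]:
    """Return (prefix list ID, endpoint ID) pairs for gateway endpoint routes."""
    data = json.loads(describe_output)
    found = []
    for table in data.get("RouteTables", []):
        for route in table.get("Routes", []):
            target = route.get("GatewayId", "")
            prefix_list = route.get("DestinationPrefixListId")
            if target.startswith("vpce-") and prefix_list:
                found.append((prefix_list, target))
    return found


if __name__ == "__main__":
    # Hand-written sample shaped like describe-route-tables output (IDs are illustrative).
    sample = json.dumps({
        "RouteTables": [{
            "RouteTableId": "rtb-a1b2c3d4",
            "Routes": [
                {"DestinationCidrBlock": "10.0.0.0/16", "GatewayId": "local", "State": "active"},
                {"DestinationPrefixListId": "pl-00000000",
                 "GatewayId": "vpce-0acd56c2e7848b287", "State": "active"},
            ],
        }]
    })
    print(endpoint_routes(sample))  # [('pl-00000000', 'vpce-0acd56c2e7848b287')]
```

In practice, you could save the CLI output to a file with `aws ec2 describe-route-tables --route-table-ids rtb-a1b2c3d4 > routes.json` and pass the file's contents to `endpoint_routes`.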

2. Endpoint Created!:

At this point, our endpoint has been completed, and we can now retest our application instance to verify connectivity.

Re-Test S3 Access

Now that our environment is fully complete, we can re-test an S3 write operation from the application instance to verify that it now succeeds. This time, the application instance's requests to S3 will be routed through the gateway endpoint in our own VPC, so S3 is reachable from our private subnet. To perform this test, SSH to the bastion host, then from the bastion host SSH to our application server and navigate to the home directory. From there, attempt an S3 copy with the following command:

aws --region us-west-2 s3 cp testfile.txt s3://aws-allow-ec2-vpc-endpoint/

Verify S3 Copy

Last, we will check our bucket to ensure that the upload was successful. Navigate back to the S3 Console, and click on the bucket where we wrote the file. There we should see our object sitting in the bucket.

Creating a VPC Endpoint with CloudFormation

CloudFormation Template to create VPC S3 Endpoint

The following CloudFormation template can be used to create an S3 VPC endpoint within your VPC.

    AWSTemplateFormatVersion: '2010-09-09'
    Description: 'Add VPC Endpoint to S3 from private subnets'
    Parameters:
      VpcId:
        Description: The VPC ID of the VPC that this endpoint will be attached to
        Type: AWS::EC2::VPC::Id
        AllowedPattern: "[a-z0-9-]*"
      RouteTableId:
        Description: The Routing Table ID of the Routing Table that this endpoint will be added to
        Type: String
        AllowedPattern: "[a-z0-9-]*"
    Resources:
      S3Endpoint:
        Type: "AWS::EC2::VPCEndpoint"
        Properties:
          PolicyDocument:
            Version: 2012-10-17
            Statement:
              - Effect: Allow
                Principal: '*'
                Action:
                  - 's3:GetObject'
                  - 's3:PutObject'
                  - 's3:DeleteObject'
                Resource:
                  - 'arn:aws:s3:::aws-allow-ec2-vpc-endpoint/*'
          RouteTableIds:
            - !Ref RouteTableId
          ServiceName: !Join
            - ''
            - - com.amazonaws.
              - !Ref 'AWS::Region'
              - .s3
          VpcId: !Ref VpcId
    Outputs:
      StackName:
        Description: 'Stack name'
        Value: !Sub '${AWS::StackName}'
      Endpoint:
        Description: 'The VPC endpoint to S3.'
        Value: !Ref S3Endpoint
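The template can be deployed from the console or with `aws cloudformation deploy`, which accepts the template's parameters through `--parameter-overrides Key=Value`. The Python sketch below assembles that command. The template file name, stack name, and IDs are placeholders, and `deploy_cmd` is a hypothetical helper.

```python
import shlex
from typing import List


def deploy_cmd(template_path: str, stack_name: str, vpc_id: str,
               route_table_id: str, region: str) -> List[str]:
    """Build an `aws cloudformation deploy` call supplying the template's two parameters."""
    return [
        "aws", "cloudformation", "deploy",
        "--region", region,
        "--template-file", template_path,
        "--stack-name", stack_name,
        "--parameter-overrides", f"VpcId={vpc_id}", f"RouteTableId={route_table_id}",
    ]


if __name__ == "__main__":
    # File name, stack name, and IDs are placeholders for illustration.
    cmd = deploy_cmd("s3-endpoint.yaml", "s3-vpc-endpoint", "vpc-a12345bc", "rtb-a1b2c3d4", "us-west-2")
    print(shlex.join(cmd))
```

The template derives `ServiceName` from `AWS::Region`, so deploying the stack in the bucket's region is what keeps the endpoint and the bucket in the same region.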

VPC Endpoint Additional Resources

No Additional Resources.
